At Third Key AI, we’re constantly looking at the horizon of security threats. The rapid integration of AI and Large Language Models into enterprise environments has created a landscape of new, subtle, and largely unexplored attack surfaces. One of the most fascinating and concerning of these is the potential for AI’s own infrastructure to be turned against itself.

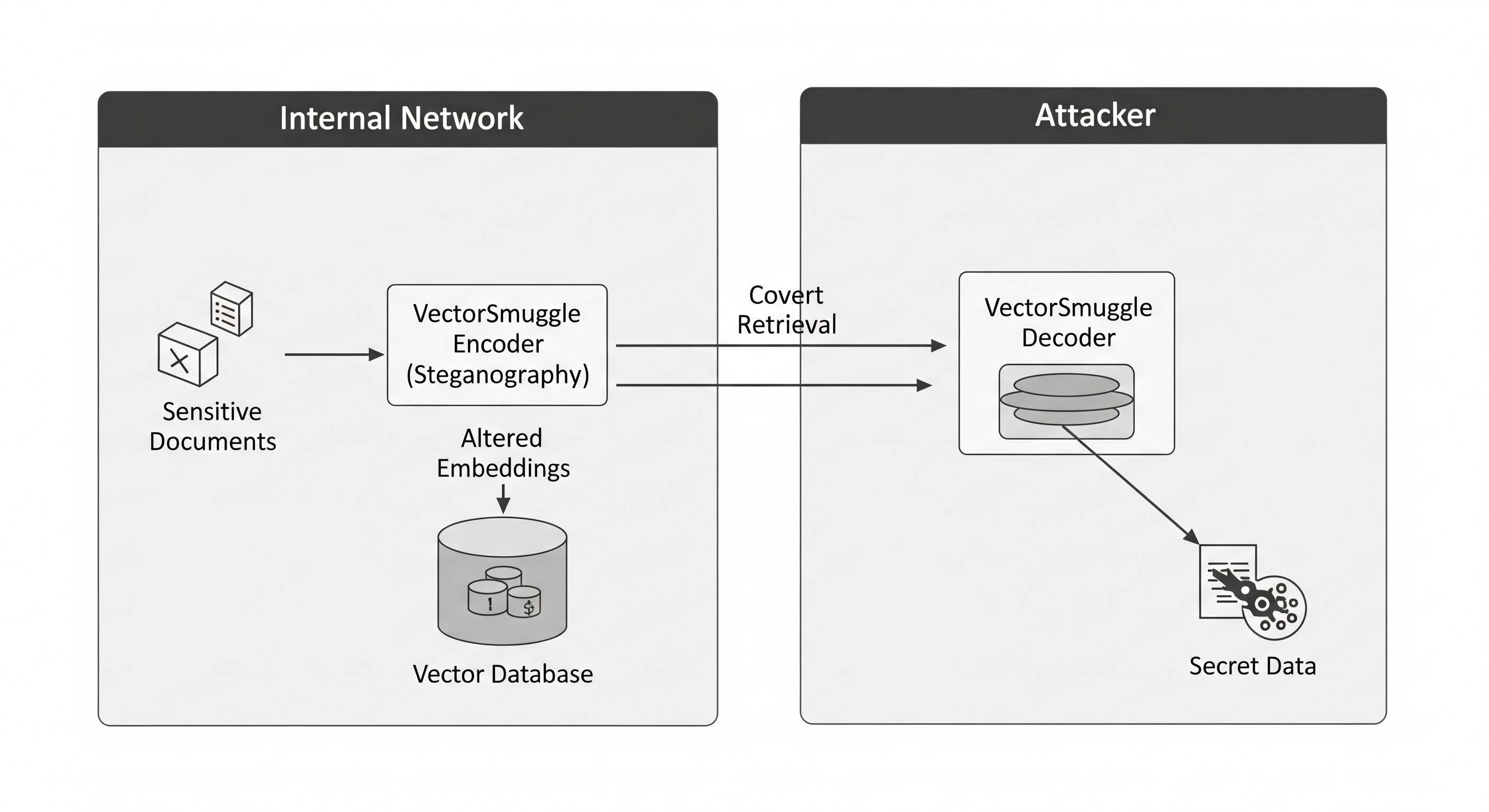

Today, we’re dissecting a novel technique that does just that: VectorSmuggle, a proof-of-concept framework that demonstrates how to use the vector embeddings at the heart of modern AI as a covert channel for data exfiltration.

The Blind Spot in the AI Pipeline

Modern Retrieval-Augmented Generation (RAG) systems work by converting massive amounts of text into a numerical representation called “vector embeddings.” Think of an embedding as a high-dimensional coordinate—a point on a complex map that represents the semantic meaning of a piece of data. These embeddings are stored in a vector database, which the AI queries to find relevant information.

Traditional security tools, like Data Loss Prevention (DLP) systems, are trained to look for sensitive data in emails, file transfers, and USB drives. They aren’t looking for secrets hidden in the subtle mathematical properties of millions of vectors being indexed by an AI. This is the blind spot VectorSmuggle was built to explore.

How to Smuggle a Secret Inside a Vector

The core idea is a sophisticated form of steganography, the art of hiding messages in plain sight. But unlike traditional methods that flip the least significant bits in an image pixel, VectorSmuggle uses techniques tailored for the floating-point, high-dimensional nature of embeddings.

The research outlines several methods:

- Rotation: The vector (our point on the map) is slightly rotated. The precise angle and axis of this tiny rotation encode the hidden data.

- Scaling: The vector is made fractionally longer or shorter. The exact scaling factor is used to represent the secret information.

- Offset: The vector is shifted by an almost imperceptible amount. The direction and distance of this shift contain the hidden message.

The key to all these methods is semantic fidelity. The changes are so mathematically subtle that the “meaning” of the vector remains intact. In experiments using the Enron email dataset, the manipulated embeddings maintained a cosine similarity of over 0.98 with the originals. To the AI system, everything appears normal. But to an attacker who knows the code, these vectors are carrying a hidden payload.

The Threat Model: An Insider’s Game

VectorSmuggle isn’t about an external hacker breaking in through the AI. The threat model assumes an adversary—a malicious insider or a compromised component—that already has access to internal documents and the ability to trigger the embedding pipeline.

By leveraging this position, the attacker can:

- Encode: Take sensitive data (e.g., financial reports, private keys).

- Embed & Obfuscate: Use VectorSmuggle to hide this data inside the embeddings of hundreds of non-sensitive documents.

- Index: Let the AI do its job, indexing these altered embeddings into the vector database.

- Exfiltrate: Later, the attacker can retrieve these embeddings and decode the hidden message, bypassing all traditional security perimeters.

A Tool for Research and Defense

The VectorSmuggle project isn’t just an upcoming paper; it’s a comprehensive, open-source framework built for security professionals. By demonstrating a viable attack, it provides red teams with a new vector to test and blue teams with a clear mandate to build new defenses.

The research shows this technique is not just theoretical. It achieves a significant data-hiding capacity and, crucially, an 88% evasion rate against a standard anomaly detector.

Defending against this requires a new mindset:

- Behavioral Monitoring: Watch for anomalous patterns in how and when data is being embedded.

- Statistical Analysis: Monitor the vector database itself for subtle statistical shifts that could indicate manipulation.

- Strict Access Controls: Enforce the principle of least privilege on the embedding pipeline and the vector store.

The age of AI demands that we evolve our security practices. VectorSmuggle is a stark reminder that the biggest threats can sometimes come from the places we least expect—not by breaking the system, but by using it exactly as it was designed.

For a deeper dive, check out the full research and toolset on the VectorSmuggle GitHub repository.